Blog Posts

Git Diff

Dec 4th, 2020

Git Rebase

Nov 27th, 2020

Git Rebase is a command used to reapply commits on top of another base tip. The base tip can be either another branch or the current branch. Rebase is useful when we need to keep branches up to date with long-lived branches, or when we want to rework the history of a feature branch. In this week's blog post we will look at different scenarios where Git Rebase is useful and understand the usage of the command with its parameters.

Kafka Log Compaction

Nov 20th, 2020

Kafka supports two log cleanup policies: delete and compact. When set to delete, log segments are deleted once the size or time limit is reached. When set to compact, Kafka keeps at least the latest value for each message key. With log compaction set up on a Kafka topic, the topic behaves like a database table: messages are rows, and rows are mutated by sending messages, where the last message received for a key represents its latest state. In this post we will see how we can set up compaction and the different settings that affect its behaviour.
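To make the "latest value per key" idea concrete, here is a minimal pure-Python sketch. It does not use Kafka at all: the `compact` function and the sample records are hypothetical, and it models an idealised compaction pass where only the last record per key survives. Real compaction only guarantees that at least the latest value is kept.

```python
def compact(log):
    """Idealised compaction: keep only the last record seen for each key.

    `log` is a list of (key, value) pairs; the list index plays the role
    of the offset. Returns (offset, key, value) tuples in offset order.
    """
    latest = {}
    for offset, (key, value) in enumerate(log):
        # A later record for the same key replaces the earlier one.
        latest[key] = (offset, value)
    survivors = [(offset, key, value) for key, (offset, value) in latest.items()]
    return sorted(survivors)  # surviving records keep their original offsets


# Hypothetical topic content: two updates for user-1, one for user-2.
log = [("user-1", "alice"), ("user-2", "bob"), ("user-1", "alicia")]
compacted = compact(log)
print(compacted)
```

Reading the compacted result from the beginning yields the current state of every key, which is what makes the table analogy work.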

Kafka Offsets And Statistics

Nov 13th, 2020

Offsets are a big part of Kafka. They indicate the position of a message in a partition of a topic, allowing consumers to know what to read and where to start from. In today’s post we will look into how consumers manage offsets, how they store and commit them, and how brokers maintain them so that a consumer group can recover from failures.
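The role of committed offsets can be sketched without a broker. The `OffsetStore` class and `consume` function below are hypothetical stand-ins, not part of any Kafka client; the sketch assumes that a group with no committed offset starts from the beginning of the partition.

```python
class OffsetStore:
    """Hypothetical stand-in for the broker-side store of committed offsets."""

    def __init__(self):
        self._committed = {}

    def commit(self, group, partition, next_offset):
        # The committed value is the offset of the next message to read.
        self._committed[(group, partition)] = next_offset

    def fetch(self, group, partition):
        # Assumption: with nothing committed, start from offset 0.
        return self._committed.get((group, partition), 0)


def consume(partition_log, store, group, partition, max_records):
    """Read up to max_records from the committed position, then commit."""
    start = store.fetch(group, partition)
    batch = partition_log[start:start + max_records]
    store.commit(group, partition, start + len(batch))
    return batch


partition_log = ["m0", "m1", "m2", "m3", "m4"]
store = OffsetStore()

first = consume(partition_log, store, "group-a", 0, max_records=2)
# A consumer that crashes and restarts resumes from the committed offset.
second = consume(partition_log, store, "group-a", 0, max_records=2)
print(first, second)
```

Because the position lives in the store rather than in the consumer, any member of the group can pick up where a failed one stopped.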

Kafka Topic Partition And Consumer Group

Nov 6th, 2020

In past posts, we’ve been looking at how Kafka can be set up via Docker and at specific aspects of a setup, like Schema Registry or log compaction. We discussed brokers, topics and partitions without really digging into those elements. In this post, we will provide a definition for each important aspect of Kafka.

Kafka Schema Registry With Avro

Oct 30th, 2020

In previous posts, we have seen how to set up Kafka locally and how to write a producer and consumer in dotnet. The topic on which the producer produces messages and from which the consumer consumes them accepts messages of any type, so producer and consumer need to agree on a contract that guarantees whatever is produced can be understood at consumption. To enforce that contract, it is common to use a Schema Registry. In this post, we will look at how we can set up and use a schema registry, and how we can create an Avro schema to enforce the shape of produced and consumed data.

Create Kafka Producer And Consumer In Dotnet And Python

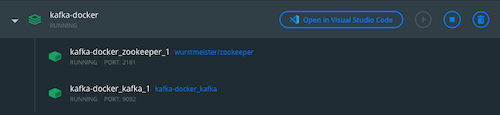

Oct 23rd, 2020

Setup Local Kafka With Docker

Oct 16th, 2020

When writing Kafka producer or consumer applications, we often need to set up a local Kafka cluster for debugging purposes. In this post, we will look at how we can set up a local Kafka cluster within Docker, how we can make it accessible from our localhost, and how we can use Kafkacat to set up a producer and consumer to test our setup.

Generate Fake Data In Python With Faker

Oct 9th, 2020

Reducing repetition in a codebase is a well-understood concept in software development. When writing features, we try to use existing functionality so that we don’t duplicate similar logic. Surprisingly, this concept is often skipped when writing tests, where we end up with over a hundred test cases that repeat the construction of input objects to fit every scenario being tested. In most languages (I haven’t checked all of them), developers have addressed this problem by providing ways to fake inputs. In today’s post, we will look into the Python Faker package and how to use it to improve code reuse.

Angular Async Pipe

Oct 2nd, 2020

The async pipe is used in Angular to unwrap a value from an asynchronous primitive. An asynchronous primitive can be either an observable or a promise. It is one of the most important pipes in Angular, as it removes the complexity of handling subscriptions to data coming from asynchronous sources like HTTP clients. The code of the async pipe is surprisingly simple, and understanding it will allow us to avoid traps like the default null value. So in today’s post, we will reimplement a toned-down version of the async pipe.

Create Stored Procedure In Mysql

Sep 25th, 2020

A stored procedure in MySQL acts as a method stored in the database. It has a name, accepts a set of arguments, and can be invoked via the CALL statement. In this post we will look at how we can define a stored procedure, how the parameters and variables work, and lastly how we can define transactions and handle exceptions accordingly.

Handle Polymorphic Array Serialization With Marshmallow

Sep 18th, 2020

A few months ago we looked into Marshmallow, a Python serialisation and validation framework which can be used to translate Flask request data to SQLAlchemy models and vice versa. In today’s post we will look at how we can serialise an array containing polymorphic data.

Postgres Window Function

Sep 11th, 2020

Window functions are calculations done across a set of rows in relation to the current row. In Postgres, we can use window functions with the keyword OVER to calculate useful aggregates like average, ranking or count over a partition of the data. In today’s post we will look at examples of window function usage.
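As a rough illustration of OVER with a partition, the sketch below runs a window query from Python. To stay self-contained it uses the standard library's `sqlite3` module as a stand-in for Postgres, which assumes the bundled SQLite is version 3.25 or later. The `salaries` table and its rows are hypothetical.

```python
import sqlite3

# In-memory SQLite database used as a stand-in for Postgres.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE salaries (name TEXT, dept TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO salaries VALUES (?, ?, ?)",
    [("ann", "eng", 100), ("bob", "eng", 80), ("cat", "ops", 50), ("dan", "ops", 70)],
)

# Each row keeps its identity, but gains values computed over its partition.
rows = conn.execute(
    """
    SELECT name, dept, salary,
           AVG(salary) OVER (PARTITION BY dept) AS dept_avg,
           RANK() OVER (PARTITION BY dept ORDER BY salary DESC) AS dept_rank
    FROM salaries
    ORDER BY dept, dept_rank, name
    """
).fetchall()

for row in rows:
    print(row)
```

Unlike GROUP BY, the query returns one output row per input row, with the department average and rank attached to each.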

Ngrx Metareducer

Sep 4th, 2020

When building an Angular application with Ngrx, it is helpful to see the actions flowing into our state for debugging. The quick and easy way to debug is to use the Redux DevTools, which shows the list of actions and provides time-travelling functionality. Another way is to simply log the action, the state prior to applying the action, and the resulting state. In Ngrx, we are able to do that using a Meta-reducer.
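The idea behind a meta-reducer, a function that takes a reducer and returns a new reducer, can be sketched in a few lines. The example below is a Python analogue rather than Ngrx code; `counter_reducer`, `logging_meta_reducer` and the action shape are all hypothetical.

```python
def counter_reducer(state, action):
    """Hypothetical reducer: returns the next state for a given action."""
    if action["type"] == "increment":
        return state + 1
    if action["type"] == "decrement":
        return state - 1
    return state


def logging_meta_reducer(reducer, log):
    """Wrap a reducer so every action is recorded with the state around it."""

    def wrapped(state, action):
        next_state = reducer(state, action)
        log.append({"action": action["type"], "before": state, "after": next_state})
        return next_state

    return wrapped


log = []
reducer = logging_meta_reducer(counter_reducer, log)

state = 0
for action in [{"type": "increment"}, {"type": "increment"}, {"type": "decrement"}]:
    state = reducer(state, action)

for entry in log:
    print(entry)
```

The wrapped reducer behaves exactly like the original one, so the logging can be added or removed without touching the reducer itself.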

Postgres Full Text Search

Aug 28th, 2020

In today’s post we will look at how PostgreSQL Full Text Search functionality can be used to retrieve ranked documents. We’ll start by looking at the basics of matching documents, then move on to how we can define indexes to improve performance, and end by looking at weights and ranking.

chrome

cypress

dbeaver

ethereum

figma

flask

git

graphql

jekyll

jenkins

js

maths

metamask

mobx

mysql

oas

postgres

postman

puml

python

solidity

splunk

sqlalchemy

sqlite

typescript

unicode

vscode

zsh