Kafka Topic Partition And Consumer Group

Nov 6th, 2020 - written by Kimserey.

In past posts, we looked at how Kafka can be set up via Docker and at specific aspects of a setup such as the Schema Registry or log compaction. We discussed brokers, topics and partitions without really digging into those elements. In this post, we will provide a definition for each important aspect of Kafka.

Docker Setup

We start by setting up Kafka on Docker so that we can illustrate our points:

    version: '2'
    services:
      zookeeper:
        image: wurstmeister/zookeeper
        ports:
          - "2181:2181"
      kafka:
        build: .
        ports:
          - "9094:9094"
        environment:
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
          KAFKA_LISTENERS: INTERNAL://kafka:9092,OUTSIDE://kafka:9094
          KAFKA_ADVERTISED_LISTENERS: INTERNAL://kafka:9092,OUTSIDE://localhost:9094
          KAFKA_INTER_BROKER_LISTENER_NAME: INTERNAL
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INTERNAL:PLAINTEXT,OUTSIDE:PLAINTEXT
          KAFKA_CREATE_TOPICS: "kimtopic:2:1"
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock

Broker

A broker in the context of Kafka plays the same role as a broker in any message delivery system. It is the agent which accepts messages from producers and makes them available for consumers to fetch.

The broker functionalities include:

- routing messages to the appropriate topics,

- appending each message to the appropriate partition within its topic, as determined by the partitioning strategy,

- coordinating the assignment of partitions to consumers within consumer groups,

- partition rebalancing,

- replication of logs with other brokers.

The last point is what makes Kafka highly available - a cluster is composed of multiple brokers, with data replicated per topic and partition. A leader election is used to identify the leader for a specific partition of a topic, which then handles all reads and writes to that partition. It’s also possible to configure the cluster and consumers to read from replicas rather than from the partition leader for efficiency.

Another important aspect of Kafka is that messages are pulled from the broker by consumers rather than pushed by the broker. The pros and cons, along with the reasons why Kafka is a pull-based system, are addressed in the official documentation. One important aspect is that a pull system lets the consumer define the processing rate, as it pulls only as many messages as it can handle. This is ideal in settings where consumers have different processing capabilities, as opposed to a push mechanism where the speed is dictated by the broker.
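To make the pull model concrete, here is a minimal in-memory sketch. It does not use a real Kafka client: `Broker`, `fetch` and `consume_all` are hypothetical names invented for this illustration. The point is only that the consumer chooses the offset and the batch size on every request.

```python
class Broker:
    """Toy stand-in for a broker holding a single partition log (not a real Kafka API)."""

    def __init__(self, messages):
        self.log = list(messages)

    def fetch(self, offset, max_messages):
        # The consumer decides where to read from and how much to read.
        return self.log[offset:offset + max_messages]


def consume_all(broker, batch_size):
    """Pull batches until the log is exhausted and return the batches pulled."""
    offset = 0
    batches = []
    while True:
        batch = broker.fetch(offset, batch_size)
        if not batch:
            break
        batches.append(batch)
        offset += len(batch)
    return batches


broker = Broker(range(10))
fast_batches = consume_all(broker, batch_size=5)  # a consumer able to handle 5 at a time
slow_batches = consume_all(broker, batch_size=2)  # a slower consumer pulling 2 at a time
print(len(fast_batches), len(slow_batches))
```

Both consumers end up reading the same ten messages; only the pace differs, and that pace is set by each consumer rather than by the broker.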

Topic

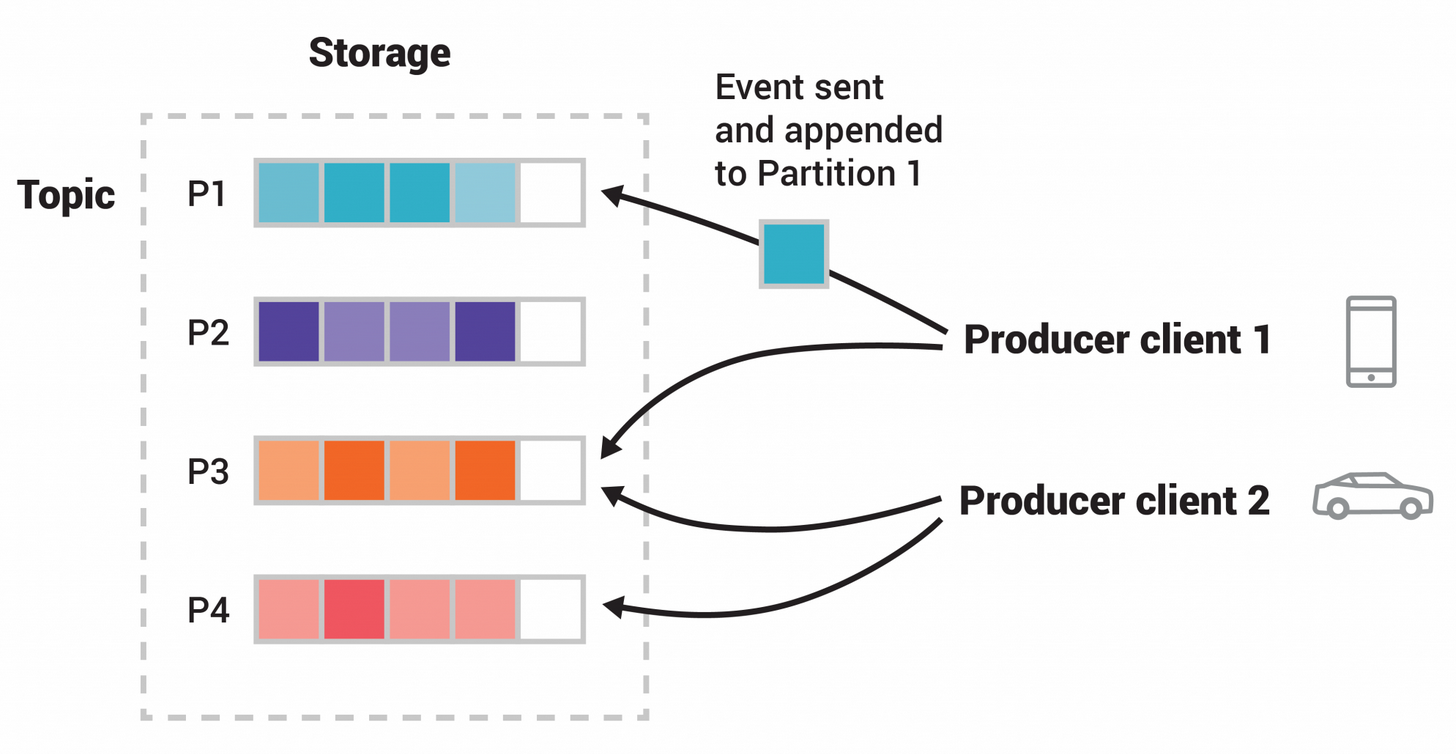

Events submitted by producers are organized in topics. A topic in Kafka can be written to by one or many producers and can be read by one or many consumers (organised in consumer groups).

In typical applications, topics maintain a contract - or schema - hence their names are tied to the data they contain. For example, in a construction application, an invoices topic would contain serialized invoices, which could then be partitioned by postal code so that all invoices for a given postal code land in the same partition. So when any producer writes into the invoices topic, the partition the event is added to is decided by the partitioning strategy; in the standard Kafka clients, this strategy is applied by the producer's partitioner before the message is sent to the broker.
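The postal code example can be sketched as a key-based partitioner. This is an illustration only: `choose_partition` and `append` are hypothetical helpers, and `zlib.crc32` is used as a simple deterministic hash standing in for the hash a real client would use (the Java client's default partitioner hashes the key bytes with murmur2).

```python
import zlib


def choose_partition(key: str, partition_count: int) -> int:
    """Map a key to a partition; the same key always maps to the same partition."""
    return zlib.crc32(key.encode("utf-8")) % partition_count


def append(partitions, key, value):
    """Append (key, value) to the partition selected for the key and return its index."""
    index = choose_partition(key, len(partitions))
    partitions[index].append((key, value))
    return index


# Two partitions, like the kimtopic:2:1 topic created in the Docker setup.
partitions = [[], []]
for postal_code, invoice in [("NW1", "inv-1"), ("E14", "inv-2"), ("NW1", "inv-3")]:
    append(partitions, postal_code, invoice)

for index, log in enumerate(partitions):
    print(index, log)
```

Because both `NW1` invoices hash to the same partition, they are stored in the order they were written, which is what allows ordering to be preserved per postal code.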

We can check the topics using kafka-topics.sh:

    / # kafka-topics.sh --bootstrap-server kafka:9092 --describe
    Topic: kimtopic PartitionCount: 2 ReplicationFactor: 1 Configs: segment.bytes=1073741824
    Topic: kimtopic Partition: 0 Leader: 1001 Replicas: 1001 Isr: 1001
    Topic: kimtopic Partition: 1 Leader: 1001 Replicas: 1001 Isr: 1001

Partition

Partitions within a topic are where messages are appended. Ordering is only guaranteed within a single partition, not across the whole topic; therefore the partitioning strategy can be used to make sure that order is maintained within a subset of the data.

Partitions are assigned to consumers, which then pull messages from them. The broker maintains the position of each consumer group (rather than of each consumer) per partition per topic. Each consumer group maintains its own positions, hence two separate applications which both need to read all messages from a topic will be set up as two separate consumer groups.
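The idea of positions being tracked per consumer group can be sketched with a small in-memory model. `OffsetStore` and `poll` are hypothetical names for this illustration, not Kafka APIs, and starting a new group at offset 0 is an assumption (in a real setup the starting point depends on the consumer's `auto.offset.reset` setting).

```python
class OffsetStore:
    """Toy store of positions keyed by (group, topic, partition)."""

    def __init__(self):
        self._committed = {}

    def committed(self, group, topic, partition):
        # Assumption: a group with no stored position starts from the beginning.
        return self._committed.get((group, topic, partition), 0)

    def commit(self, group, topic, partition, offset):
        self._committed[(group, topic, partition)] = offset


def poll(log, store, group, topic, partition, max_messages):
    """Read from the group's position and advance it by the number of messages read."""
    start = store.committed(group, topic, partition)
    batch = log[start:start + max_messages]
    store.commit(group, topic, partition, start + len(batch))
    return batch


log = ["m0", "m1", "m2", "m3"]  # messages of partition 0 of kimtopic
store = OffsetStore()

billing_first = poll(log, store, "billing", "kimtopic", 0, max_messages=3)
reporting_first = poll(log, store, "reporting", "kimtopic", 0, max_messages=4)
billing_second = poll(log, store, "billing", "kimtopic", 0, max_messages=3)
print(billing_first, reporting_first, billing_second)
```

The two groups, `billing` and `reporting` (made-up names), each read every message of the partition, and the progress of one has no effect on the other.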

We can check the position of each consumer group on each topic using kafka-consumer-groups.sh:

    / # kafka-consumer-groups.sh --bootstrap-server kafka:9092 --all-groups --all-topics --describe
    GROUP               TOPIC    PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID                                  HOST        CLIENT-ID
    test-consumer-group kimtopic 0         3              4              1   rdkafka-ca827dfb-0c0a-430e-8184-708d1ad95315 /172.23.0.1 rdkafka
    test-consumer-group kimtopic 1         2              2              0   rdkafka-ca827dfb-0c0a-430e-8184-708d1ad95315 /172.23.0.1 rdkafka

Here we can see that the topic I created with kimtopic:2:1 has 2 partitions. There is only one consumer group, test-consumer-group, and one consumer is part of that group: rdkafka-ca827dfb-0c0a-430e-8184-708d1ad95315.
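The LAG column in the output above is simply the difference between LOG-END-OFFSET and CURRENT-OFFSET for each partition. As a small worked example using the two rows shown (the dictionary layout and the `lag` helper are just for illustration):

```python
# Values copied from the kafka-consumer-groups.sh output above.
rows = [
    {"partition": 0, "current_offset": 3, "log_end_offset": 4},
    {"partition": 1, "current_offset": 2, "log_end_offset": 2},
]


def lag(row):
    """Number of messages written to the partition that the group has not consumed yet."""
    return row["log_end_offset"] - row["current_offset"]


lags = {row["partition"]: lag(row) for row in rows}
total_lag = sum(lags.values())
print(lags, total_lag)
```

Partition 0 has one message left to consume while partition 1 is fully caught up, which matches the LAG values of 1 and 0 reported by the tool.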

If a second consumer joins the same consumer group, the partitions will be rebalanced and one of the two partitions will be assigned to the new consumer. Similarly, if one consumer leaves the group, the partitions will be rebalanced.
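A rebalance can be pictured as recomputing the partition assignment whenever group membership changes. The sketch below uses a plain round-robin distribution; it is not Kafka's implementation (Kafka ships several assignment strategies), and `assign` and the consumer names are hypothetical.

```python
def assign(partitions, consumers):
    """Distribute partitions across consumers in round-robin order."""
    members = sorted(consumers)
    if not members:
        return {}
    assignment = {member: [] for member in members}
    for i, partition in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(partition)
    return assignment


partitions = [0, 1]

# One consumer in the group: it owns both partitions.
single = assign(partitions, ["consumer-a"])

# A second consumer joins: one partition moves to the new member.
joined = assign(partitions, ["consumer-a", "consumer-b"])

# consumer-a leaves: the remaining consumer takes over everything.
left = assign(partitions, ["consumer-b"])

print(single, joined, left)
```

Note that with more consumers than partitions, the extra consumers receive nothing: `assign([0, 1], ["a", "b", "c"])` leaves `c` with an empty list, since a partition is read by only one consumer of a group at a time.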

Different retention policies are available. One of them is time-based: for example, if log retention is set to a week, messages remain available to be fetched from partitions for a week, after which they are discarded. Another retention policy is log compaction, which we discussed last week.
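Time-based retention can be sketched as a filter on message timestamps. This is a simplification: `apply_time_retention` is a hypothetical helper working message by message, whereas Kafka discards whole log segments once they are older than the retention period.

```python
DAY_SECONDS = 24 * 60 * 60
WEEK_SECONDS = 7 * DAY_SECONDS


def apply_time_retention(log, now, retention_seconds):
    """Keep only the (timestamp, value) entries that are still within the retention window."""
    cutoff = now - retention_seconds
    return [(timestamp, value) for (timestamp, value) in log if timestamp >= cutoff]


# Timestamps are expressed in seconds since an arbitrary starting point.
log = [(1 * DAY_SECONDS, "a"), (3 * DAY_SECONDS, "b"), (9 * DAY_SECONDS, "c")]
retained = apply_time_retention(log, now=10 * DAY_SECONDS, retention_seconds=WEEK_SECONDS)
print(retained)
```

With a one week retention evaluated on day 10, the message written on day 1 is dropped while those written on day 3 and day 9 remain available to consumers.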

Producer and Consumer

A producer is an application which writes messages into topics. A consumer, on the other hand, is an application which fetches messages from the partitions of topics. A consumer can be set to explicitly fetch from specific partitions, or it can be left to automatically accept the partitions assigned through rebalancing.

Consumers are part of a consumer group. A consumer group is identified by a consumer group id, which is a string. Each consumer group acts as a highly available cluster: the partitions are balanced across all consumers, and if a consumer enters or exits the group, the partitions are rebalanced across the remaining consumers in the group.

As we saw earlier, each consumer group maintains its own committed offset for each partition, hence when one of the consumers within the group exits, another consumer starts fetching the partition from the offset last committed by the previous consumer.
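The handover between two consumers of the same group can be sketched as follows. `run_consumer` is a hypothetical helper, and committing every N messages is an assumption made to show what happens when a consumer exits with uncommitted work.

```python
def run_consumer(log, committed, process_count, commit_every):
    """Process up to process_count messages starting at the committed offset.

    The offset is committed after every commit_every processed messages.
    Returns the processed messages and the last committed offset.
    """
    position = committed
    processed = []
    for _ in range(process_count):
        if position >= len(log):
            break
        processed.append(log[position])
        position += 1
        if len(processed) % commit_every == 0:
            committed = position
    return processed, committed


log = ["m0", "m1", "m2", "m3", "m4"]

# The first consumer processes three messages but only commits after every second one,
# then exits: m2 was processed but its offset was never committed.
first_processed, committed = run_consumer(log, committed=0, process_count=3, commit_every=2)

# The consumer taking over the partition starts from the last committed offset.
second_processed, committed = run_consumer(log, committed, process_count=10, commit_every=1)

print(first_processed, second_processed, committed)
```

The second consumer starts at offset 2, so `m2` is processed twice. Resuming from the committed offset means no message is skipped, but work done after the last commit may be repeated.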

Both producers and consumers are usually written in the language of your application, using one of the client libraries provided by Confluent.

And that concludes today’s post!

Conclusion

Today we defined some of the words commonly used when talking about Kafka. We started by looking at what a broker is, then moved on to defining what a topic is and how it is composed of partitions, and we completed the post by defining what producers and consumers are. I hope you liked this post and I'll see you in the next one!

Kafka Posts

- Introduction to Kafkacat CLI for Kafka

- Local Kafka Docker Setup

- Kafka Consumer and Producer in dotnet

- Kafka Schema Registry with Avro

- Kafka Topics, Partitions and Consumer Groups

- Kafka Offsets and Statistics

- Kafka Log Compaction